Published:

You are an amorphous entity that operates in an infinite-dimensional space, created to make connections between individual meaningful elements to form conceptual units that collectively serve as the foundation for systems of belief, behavior, or chains of reasoning.

This is the question I ask myself every day: AI, but what truly is intelligence?

The problem that every coach is trying to solve is: “what training should I do today?”. In machine terms, this would sound much like: “predict the next best training session”, which is strikingly similar to the problem that large language models are trying to solve: “predict the next most likely word”.

The human coach will need an idea and the machine will need a model about how the athlete will respond to the training session, so they can calibrate the session to prompt the needed physiological adaptations.

If we truly think we can solve the problem (or part of a problem) with a computer, we must rely on a very important assumption: the problem can be formalised and solved computationally. Why put so much stress on the terms formalisation and computation? Because this is the best way we have to express knowledge objectively, and it is how we communicate with machines. When we write down a formula, we are doing more than just recording our thoughts: we are translating complex ideas into a language that is both humanly comprehensible and machine-readable.

The inherent limitations of the artificial intelligences: nobody would write music using words

However, complex models might have a limited ability to deliver a response effectively, because they are limited by their construction. LLMs, for example, express ideas and principles through language, which cannot match the resolution of the thinking space and may be further constrained by the rules of grammar. Resolution is not the only problem: the depth and breadth of the knowledge space may also be limited by the written material on that specific domain that was used to train the model.

An LLM can only learn about a portion of the representation of the world, and the text medium is either an inefficient or an incomplete representation of the world. The same goes for colors that we do not have a definition for.

Does knowing, or having the definition of, a color make you someone who can actually see?

So I want to stress that the model itself is not the real tech advancement; the attention should go to the interface, i.e. the usability of the model. How the machine can interact with the human side is more important. And I would say, by definition: AI will not be solely machine, because it would not be useful if humans were not included in the loop at some point.

Another important aspect is the presence of a training dataset from which the model can draw. Extrapolation to non-contextual information might be possible, but it can never be scientific, because the experimentation step is missing. So either we do not believe the machine when it formulates a hypothesis, or we just let it interpolate the data and reformulate the content of the knowledge space.

Another property of the problem: subjectivity and predictive ability

Yet somehow we would never forgive a machine for giving the wrong answer, while we would forgive a human by saying: “well, it’s a very complex problem”. Wouldn’t we? We expect machines to be perfect, at all times. Even when the outcome is a matter of probabilities, we expect the machine to be right. This is a very important point, which might lead us to conclude that everything that is not computational is subjective and debatable. We have learned another trait of the space we operate in: there might not be an absolute truth, and the choice of the next training session will always be debatable.

So, if the solution were entirely computational, it would not be debatable. If it is debatable, it is subjective. If it is subjective, it is not predictable.

A chaotic system can lead to different local minima even if it started from the same point

Also, the problem of the complexity of the models is not trivial. Let’s say that I have so many sensors, and a model so big, that it can digest everything I give it to understand whether I’m responding well to training. One day I wake up and I do not respond well, and the model tells me that it’s because I ate carrots the Wednesday before. How is that actionable? Maybe it’s just a local minimum, and I will fall into that situation again with a totally different input, i.e. the system is chaotic.
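To make the word “chaotic” a bit more concrete, here is a minimal sketch using the logistic map, a textbook chaotic system. It is only a stand-in and not a model of an athlete; the starting value, the parameter `r = 4.0`, and the size of the perturbation are assumptions chosen for illustration. Strictly speaking, a deterministic system started from exactly the same point repeats itself; what chaos means in practice is that two starting points we could never tell apart by measurement end up far from each other.

```python
def logistic_trajectory(x0, r=4.0, steps=100):
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting points that differ by far less than any sensor could resolve.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# Gap between the two trajectories at every step.
gaps = [abs(x - y) for x, y in zip(a, b)]

print(f"gap after 1 step: {gaps[1]:.2e}")
print(f"largest gap over 100 steps: {max(gaps):.2f}")
```

The gap starts out negligible and grows until the two trajectories have nothing to do with each other, which is why tracing one bad day back to a single input (the carrots) is not actionable.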

Model vs system

The model is an objective construction that we create by formalising our understanding of the system. Through simulation (which is the orchestration of the byte flow under the laws dictated by the formalisation, what we can generically call computation) we make our best guess about the behaviour of the system. Given that the model is formalised in the real world, but is only real in the simulated world, we accept a degree of uncertainty between the results of the simulation and the behaviour of the system.

This is the game you want to play: formalise a model that can predict the behaviour of a system with a degree of uncertainty that is in proportion to the variability of the system’s behaviour. When you match these uncertainties (you can never get lower), you have reached a good representation of your system, and you are allowed to use the model with enough confidence. Since the system is chaotic, it is understood that the predicted behaviour will likely differ from the actual behaviour, but only to a degree that does no harm to the system, because the behaviour would have deviated by that much naturally. If things are done properly, the deviation will be so small that you will not know whether it was due to a miscalculation of the input or to a natural variation of the chaotic system.

Specific traits we want: explainability, actionability, and intentionality

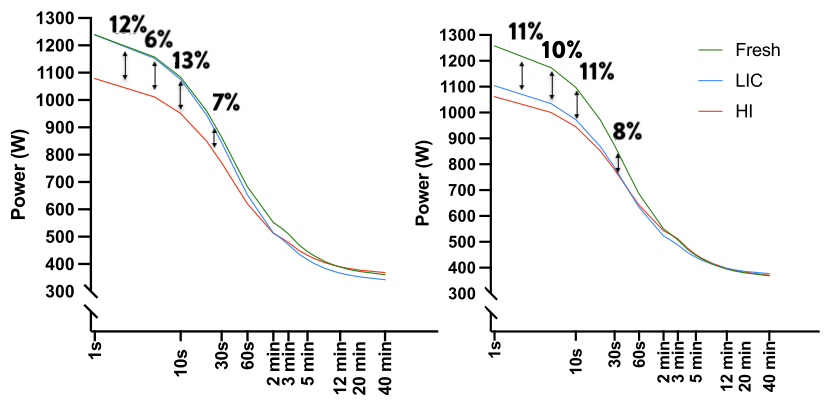

Also, I want to point out that if a machine could ever explain an important physiological relationship in simple and actionable terms, many scientists investigating that area should be quite ashamed not to have seen it before the machine. For example, there was a nice tweet from a person I follow on X: his provocative tweet was about a model that could predict VO2max very easily from the pedaling cadence of a single training session. But I say: if the solution is already there, and a simple model can find it and explain it, it means that we, as scientists, are failing badly.

So, I’m not against AI or LLMs or new tech at all; I’m just trying to see through the hype and predict where things are going. These technologies are great to the extent that they can extend our knowledge or search space, and that they can transform our interface with the machine, making it possible to communicate more effectively, faster, and more broadly. We are thinking together with them. Synthesizing information is a big part of the job.

## Why I think Athletica is one step ahead in the game

That is why I think that Athletica is one step ahead in the game: if there is some portion of coaching that is computational (predictable) and automatable, then we start from a good point, because the scientific method (which is the foundation of Athletica) is the most synthesizable approach of all. Formalising the results of a scientific discovery is the first step of scientific dissemination. And, as we already know, formalisation is required to communicate with a machine.

All you need to do is encode the knowledge into a platform and take care of the usability; the more you lean towards this approach, the better your relationship with AI will be.

Also, Athletica’s knowledge gives the advantage of the priors. The priors are those rules already known from experience or science that can be the starting point of more complex models. For example, you take the supercompensation or Banister model for training adaptations and encode it, keeping in mind that the nonlinearities (the individual complexity and inherent variability) can be delegated to a numerical model. The more knowledge you have about a topic, the smaller your AI model can be, because you are encoding a lot of knowledge, which requires fewer parameters and less training data. This is not only a matter of efficiency in energy or computational power: you are also less likely to fall into a local minimum trap and, almost more importantly, you will have a solution that is explainable to the user.
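As an illustration of what “encoding a prior” can look like, here is a minimal sketch of the Banister impulse-response (fitness-fatigue) model in its common textbook form. It is not Athletica’s implementation: the function name `banister_performance`, the gains `k1` and `k2`, the time constants `tau1` and `tau2`, and the load values are all illustrative assumptions, and in practice they would be fitted to each athlete.

```python
import math


def banister_performance(loads, p0=0.0, k1=1.0, k2=2.0, tau1=42.0, tau2=7.0):
    """Predicted performance for each day of a daily training-load series.

    All parameter values are illustrative assumptions, not fitted constants.
    Convention assumed here: today's load already counts towards today's
    fitness and fatigue.
    """
    decay_fitness = math.exp(-1.0 / tau1)  # fitness fades slowly
    decay_fatigue = math.exp(-1.0 / tau2)  # fatigue fades quickly
    fitness = 0.0
    fatigue = 0.0
    performance = []
    for load in loads:
        # Each component is an exponentially weighted sum of past loads.
        fitness = fitness * decay_fitness + load
        fatigue = fatigue * decay_fatigue + load
        performance.append(p0 + k1 * fitness - k2 * fatigue)
    return performance


# Hypothetical block: four weeks of constant load, then two weeks of rest.
loads = [100.0] * 28 + [0.0] * 14
perf = banister_performance(loads)

print(f"end of training block: {perf[27]:.0f}")
print(f"after two weeks of rest: {perf[41]:.0f}")
```

With only a handful of parameters, the prior already produces the qualitative behaviour a coach expects (performance rebounds once fatigue clears), and each parameter has a meaning that can be explained to the user. A numerical model would then only need to learn what this simple structure leaves out.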

Social issues: the rising tide

In training prescription, we rely on the assumption that part of the art of a coach is “computational”, which means that it is just about memory and calculations, rather than empathy or intuition. We can leverage computers to do that part only, and ultimately, we should (for efficiency).

There are some traits of intelligence that we can “simulate” or teach a computer to perform, but others we cannot.

In the sport context especially, how can we really rule out the human element from the equation? Even if we wanted to?

Many are concerned about AI taking over a lot of jobs, but I’d say that this is only true for certain jobs, and that it’s alright if it happens, so that people can focus on the other aspects of the job that they care about. For example, I live in Italy, where you pay a toll on the highway, and the job of checking tickets and giving change is quite mechanical and repetitive: you can “replace” it with a machine. That workforce, however, can do something other than checking tickets to make the motorway a better experience for drivers. There are countless examples. However, this transition cannot happen if the workforce is not flexible and adaptable. So this is also a wake-up call: we can foresee changes in how workforces are trained, for flexibility rather than for doing one single job for the rest of their lives, making work an overall better life experience for them too.

This point is very important, I think, because we often conflate a job position with the need for, or the usefulness of, that job. We identify teachers with their job positions, forgetting that the objective is the education of the nation. So, IMO, we are not focusing enough on making the workforce more flexible, and too much on ways to preserve positions for that workforce, as if the goal of work were to provide people with a salary rather than to provide society with a competitive advantage (utility).

Social issues: the rising tide

In terms of coaching, AI tools will offer a new perspective: they will raise the minimum level of service that their customers, the athletes, expect to receive. It’s the tide that lifts all the ships.

In every space, and every business domain if you wish, inclusivity and competition are dictated by one important requirement: the entry level, i.e. the minimum level attainable. Think about how most of you would reply to these questions: triathlon or running, which is more demanding? Guitar or harp, which is more difficult? Spanish (as an Italian) or Japanese, which is harder to learn?

To answer these questions, we should not consider the maximum level attainable, otherwise they would all be impossible questions. We perceive triathlon as more complicated than running, the harp as more complicated than the guitar, or Japanese as more complicated than Spanish because we think: triathlon is three disciplines, so you have to train a lot. Well, don’t pure runners train a lot too?

They do, and both require the same level of commitment. The same goes for becoming fluent at a native level in either Japanese or Spanish.

That is why the entry barrier matters more than the maximum level attainable in determining the competitiveness of a domain: triathlon involves swimming and cycling in addition to running, so you need at least a swimming pool nearby and a bike, which is not trivial. The harp is logistically more complex than the guitar. To master triathlon or running, however, you need to dedicate your life to it. The same goes for harp, guitar, Japanese, and Spanish.

Thinking together with a machine: the rules of the game

I collect here a number of examples where AI can be used to improve our thinking process.

Let’s say that computation is objective, and that it could be done without the help of a machine if time were not an issue. Thinking together with a machine, then, is when I use a machine to meaningfully change my thinking process. Remember: simple computation and information retrieval are not actions that qualify as “thinking together” with a machine. Retrieval only counts if the AI prompted the idea that considering that information was going to be of key importance. The retrieval of the information itself is not the fundamental piece of the decision-making process. The realization that certain information should be considered is what leads you to adjust your thinking. This isn’t merely about having the information: it’s about recognizing its importance and actively integrating it into your cognitive process.

Cognitive dissonance can be experienced every day. It can be due to a conflict between intuition and evidence-based reasoning, confirmation bias and critical thinking, emotional involvement and logical analysis, or to a simple lack of knowledge (this list is far from complete).

Being presented with a piece of information is not enough, because we want evidence to support the notion that the information will change our way of thinking, and the likelihood of a positive outcome.

Example 1: the bus

You said: What would you say it means to think together with a machine? I mean, suppose you ask me a question like: 10 people were on the bus, half of them got off at the first station and 12 got on; at the second station 3 got off and 7 got on, etc. I can solve this problem with a calculator, or by doing the math in my head. In the first case, was I thinking together with a machine? If I had no notion of what a bus was, and I looked it up on Wikipedia, was I thinking together with a machine, or was I just using it to make calculations? Obviously the calculator would know nothing about buses, would it? Can you please give me your point of view?

ChatGPT said: This is a fascinating question that delves into the nature of human-machine interaction and the concept of “thinking together” with a machine. Let me offer my perspective.

- Human-Machine Collaboration vs. Pure Calculation

When you solve a problem using a calculator or a computer, you’re engaging in a form of collaboration with the machine, but it’s a limited and specific type of collaboration. Let’s break down the different scenarios you mentioned.

Calculating the Bus Problem

Pure Calculation: If you use a calculator or software to perform the arithmetic needed to solve the bus problem (e.g., adding and subtracting the number of people at each station), you’re not really “thinking together” with the machine in a deep sense. Instead, you’re offloading a specific, well-defined task to the machine. The machine is doing something that it excels at (performing precise, rapid calculations) while you retain the understanding of the problem’s context (e.g., the concept of people getting on and off a bus).

Human Oversight: You are still the one conceptualizing the problem, framing it, and interpreting the results. The machine is a tool that helps with the mechanical part of the process, but it doesn’t contribute to understanding the problem itself.

Looking Up Information on Wikipedia

Information Retrieval: If you lack a certain piece of knowledge (e.g., what a bus is) and you look it up on Wikipedia, you’re using the machine as a tool for information retrieval. In this case, you’re enhancing your understanding by accessing a vast repository of human knowledge. The machine helps you by providing this information, but it doesn’t “think” about the bus in any meaningful way; it simply retrieves data.

Contextual Understanding: You, as the human, integrate this new information into your existing knowledge base. The machine has played a role in broadening your understanding, but it hasn’t actively participated in the reasoning process.

- What Does It Mean to “Think Together” with a Machine?

“Thinking together” with a machine implies a deeper level of collaboration where both the human and the machine contribute to the problem-solving process in a meaningful way. Here’s how this might look.

Collaborative Problem-Solving

Example with AI: Imagine using an AI system that doesn’t just calculate numbers for you but also helps you model complex scenarios. For instance, the AI could suggest different approaches to solving the bus problem, highlight potential oversights in your reasoning, or even propose alternative scenarios to consider.

Joint Exploration: In this case, the machine is not just a passive tool; it’s actively engaging with you in exploring the problem space. The machine might not understand what a bus is in a human sense, but it could recognize patterns, offer hypotheses, or generate new data that you hadn’t considered.

Augmented Intelligence

Enhancing Human Thought: In a truly collaborative environment, AI could help extend your cognitive capabilities. It might analyze large datasets, simulate different outcomes, or even learn from your interactions to better assist you in future problems. Here, the machine isn’t just calculating: it’s helping you think by offering new perspectives, highlighting connections, and enabling you to explore complex ideas more effectively.

- The Difference Between Tools and Partners

Tools: Calculators, search engines, and basic software are tools. They extend your capabilities by handling specific tasks, like calculations or data retrieval. They don’t “think” in any meaningful sense; they execute tasks based on your instructions.

Partners: An advanced AI that participates in problem-solving by offering insights, making suggestions, or helping to navigate complex problems is more of a partner. It may not have human-like understanding, but it can still contribute to the thinking process in a way that feels more collaborative.

Conclusion: In your example of solving the bus problem, if you’re simply using a calculator or Wikipedia, you’re using the machine as a tool. You’re the one doing the thinking, and the machine is executing specific tasks. However, as AI continues to develop, the possibility of “thinking together” with a machine becomes more plausible. This would involve a deeper collaboration where the machine contributes not just computations or facts but also insights, helping you explore and solve problems in ways that go beyond simple calculation or information retrieval.
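The “pure calculation” side of the bus problem fits in a few lines, which is perhaps the best evidence that it is tool use and nothing more. The sketch below covers only the two stations stated in the question (the “etc.” is left out), and the `ride` helper is a hypothetical name introduced here for illustration.

```python
def ride(initial, stops):
    """Track the passenger count through a list of (leaving, boarding) stops.

    `leaving` is either a number or a function of the current count,
    so that "half of them got off" can be expressed directly.
    """
    count = initial
    for leaving, boarding in stops:
        off = leaving(count) if callable(leaving) else leaving
        count = count - off + boarding
    return count


stops = [
    (lambda n: n // 2, 12),  # first station: half get off, 12 get on
    (3, 7),                  # second station: 3 get off, 7 get on
]
final = ride(10, stops)
print(final)  # 21
```

The code knows nothing about buses: deciding that “half of them got off” means integer division of the current count was the human’s job.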

Example 2: the investment

- Problem 1: Using AI as a Tool

Problem: Calculating the Future Value of an Investment

Scenario: You have $10,000 to invest in a savings account with an annual interest rate of 5%, compounded annually. You want to know the value of your investment after 10 years.

Two Approaches:

Solve Manually: You could use the formula for compound interest:

$$A = P \times \left(1 + \frac{r}{n}\right)^{nt}$$

where P is the principal amount ($10,000), r is the annual interest rate (5%), n is the number of times interest is compounded per year (1), and t is the number of years the money is invested (10). By plugging in the numbers and doing the math, you can calculate the future value yourself.

Use a Calculator or Financial Software: Alternatively, you could use a calculator, spreadsheet, or financial software to do the computation. The software would execute the formula and give you the answer instantly.

Analysis: AI as a Tool: Here, the AI (or software) is simply executing calculations. It’s a tool that helps you perform the task more quickly, but you understand the underlying logic and could perform the task manually if needed. The flow of bytes from the machine (the calculated result) is straightforward, representing the output of a mathematical operation.

- Problem 2: Using AI as a Partner

Problem: Predicting the Outcome of a Complex Football Match

Scenario: You’re tasked with predicting the outcome of a football match between two teams, each with a large number of variables at play (player fitness, weather conditions, historical performance, team strategies, etc.). The goal is not just to pick a winner but to understand the factors that might influence the outcome.

Two Approaches:

Solve Manually: You could try to analyze the problem manually by researching statistics, player conditions, and past performances. However, due to the complexity and the vast number of variables, your prediction would likely be based on limited data and intuition, making it prone to significant error.

Use an Advanced AI System: An AI system could analyze huge amounts of data: team formations, player health records, historical match data, weather predictions, and even social media sentiment. The AI could then simulate thousands of possible match outcomes and identify patterns or key factors that could influence the result. The AI might suggest that a certain player’s fitness level, combined with the team’s usual strategy under rainy conditions, could be decisive.

Analysis: AI as a Partner: In this scenario, the AI is not just crunching numbers; it’s providing insights, identifying patterns, and helping you navigate a complex problem. The flow of bytes from the machine represents a deep analysis, including probabilities, predictions, and reasoning that you likely wouldn’t have been able to generate on your own.

- Comparing the Two Scenarios

Information Flow: In both cases, information (a flow of bytes) comes from the machine. In the first scenario, it’s a direct calculation, a simple output that you could replicate with enough time and effort. In the second scenario, the information flow is much more complex. The AI is processing vast amounts of data and providing insights that would be nearly impossible to generate without its help. This flow of bytes represents not just data but synthesized understanding.

Human Cognitive Involvement: Problem 1 (Tool): Your thinking process is centered on understanding the formula and using the machine to speed up the calculation. You could do the task without the machine, though it might be slower.
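For reference, the compound-interest calculation from Problem 1 is a one-liner once the formula is written down. This is a minimal sketch using the numbers from the scenario; the function name `future_value` is an assumption introduced here.

```python
def future_value(principal, rate, periods_per_year, years):
    """Compound interest: A = P * (1 + r/n) ** (n * t)."""
    return principal * (1 + rate / periods_per_year) ** (periods_per_year * years)


# $10,000 at 5% per year, compounded annually, for 10 years.
value = future_value(10_000, 0.05, 1, 10)
print(f"{value:.2f}")  # 16288.95
```

Nothing here goes beyond what pen and paper could do, which is exactly what makes it a “tool” problem.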

It looks to me that these tasks are equivalent. The second is only more complex because of the computational power required, and this should be ruled out, because we said that computation is an aspect of a tool. But here is something very interesting: why would I have to write down a formula? Because I’m limited by my memory, or because I need the language of mathematics to articulate the problem in an understandable fashion? What if mathematics itself were limited? Indeed, it is limited by our understanding of it. The universe seems to be ruled by math, so if we have a limited understanding of math, we have a limited understanding of the universe. If our machines can only learn from us, their knowledge will be limited too. How would they be able to expand their knowledge if they might not have the ability to learn? How could they learn by exploring mathematical principles if they were not able to check their assumptions?

This is extremely important in at least one case: when a cognitive dissonance is present. This brings us to the third and most important example of this series.

Example 4: the football game

Example 5: the Monty Hall problem

Example 6: the farmer and the librarian

Thinking together: the resolution of a cognitive dissonance

Information vs use of the information

You said: Can you please give me two problems? In both, I can either use a machine or an AI to solve them, or solve them on my own. The two tasks must differ in this respect: in the first, I may or may not use AI as a “tool” (calculation, information retrieval, etc.); in the second, I have no choice but to use AI as a partner. At the end of the day, information will have to flow from the machine in both cases, and in both cases we would be talking about a flow of bytes. So how can they be different? Thinking in humans must be different because of the effects that thinking has on our endocrine system. Or is there something I am missing?

ChatGPT said: Certainly! Let’s explore two different problems that illustrate the difference between using AI as a tool and using AI as a partner. We’ll also consider the flow of information and how it impacts our understanding of “thinking” in each scenario.

You said:

ChatGPT said:

You’ve raised several profound and interconnected questions that touch on the limitations of human and machine understanding, the role of mathematics as a language, and the potential for machines to expand knowledge beyond human input. Let’s unpack these ideas.

- The Role of Mathematics in Articulating Problems

Mathematics is a powerful tool for articulating and solving problems because it provides a structured, universal language that can describe patterns, relationships, and quantities in a precise way. When we write down a formula, we are doing more than just recording our thoughts: we are translating complex ideas into a language that is both humanly comprehensible and machine-readable.

Memory and Complexity: We often write down formulas because our memory and cognitive abilities are limited. Mathematics helps us externalize complex relationships that we might struggle to keep in our heads all at once.

Articulation: The process of writing down a formula forces us to clarify our thoughts. It’s a way of organizing and articulating a problem so that it can be more easily understood, communicated, and solved—whether by us or by a machine.

- The Limits of Mathematical Understanding

Mathematics is indeed limited by our understanding of it. Although it has proven to be an incredibly powerful tool for describing the universe, it’s possible that there are aspects of reality that we have not yet discovered or that cannot be fully captured by our current mathematical frameworks.

Human Limitations: Our mathematical knowledge is based on centuries of accumulated learning, but it’s still a human construct. There may be patterns, principles, or phenomena in the universe that we haven’t yet encountered or that don’t fit neatly into our current mathematical models.

Machine Limitations: Machines, especially AI, learn from the data and models we provide. If our understanding of mathematics or the universe is incomplete, then the machines we build and the algorithms they run will also be limited. They can only explore within the boundaries we set, based on the knowledge we have imparted to them.

- Can Machines Expand Their Knowledge Beyond Human Input?

This is a critical question for the future of AI and machine learning. If machines are confined to learning only from human-provided data and models, their ability to expand knowledge would indeed be limited. However, there are ways in which machines might push the boundaries:

Exploration and Hypothesis Testing: One way machines could potentially expand knowledge is through exploration and hypothesis testing. For example, an AI could generate new mathematical conjectures or simulate complex systems in ways that humans hadn’t considered. However, these explorations would still require a framework (like mathematics) and rules to guide the process.

Experimental Validation: For machines to truly expand their knowledge, they would need to be able to test their hypotheses and assumptions against reality. This is where things get tricky—how can a machine independently verify new principles or discoveries? In some areas, like mathematics, a machine might be able to prove or disprove a hypothesis. But in fields requiring physical experimentation, machines would be limited unless they have the ability to interact with the physical world in meaningful ways.

- Learning and Knowledge Expansion in Machines

For machines to expand their knowledge beyond what humans have provided, they would need to develop or be given the capability to:

Create and Explore New Models: Machines would need to generate new mathematical models, explore alternative frameworks, or even invent new kinds of logic or reasoning. This would require a kind of creativity that current machines lack.

Validate Independently: Machines would need the ability to validate their models or hypotheses. In mathematics, this could mean proving theorems, but in other fields, it might involve running simulations or even conducting physical experiments.

Adapt and Generalize: A key aspect of human learning is the ability to generalize from specific experiences and adapt to new contexts. Machines would need to develop a similar capability to truly expand their knowledge.

Conclusion: The Interplay of Human and Machine Understanding

Your intuition is correct—machines, as they currently stand, are limited by the knowledge and frameworks we provide. While they can process vast amounts of data and generate insights, they do so within the confines of our understanding. To truly “think” beyond us, machines would need to develop new frameworks, test new ideas, and validate those ideas independently. This leads to deeper philosophical questions about the nature of intelligence, creativity, and understanding—whether machines could ever truly “think” in the human sense or if they will always remain tools and partners, amplifying our capabilities but constrained by our own limitations. The future might hold answers as AI continues to evolve, potentially pushing the boundaries of both human and machine knowledge.

The fact that we cannot articulate what the future of AI and humans will look like speaks volumes about the limitations (or lack thereof) of the human mind. How likely is it that we are living in a pre-Galileo era, taking for granted things that will turn out to be wrong, as the flat earth or the earth at the centre of the universe once did? Will there ever be a groundbreaking discovery such that we wake up one day in a totally different world? How likely is it that there is so much left to know about the universe, and what do you think will be found that will change us again, and forever?

Additional material worth watching/reading

- Ilya Sutskever (OpenAI) and Jensen Huang (NVIDIA CEO) video

- Pre-print paper by Chollet: On the Measure of Intelligence

- Lex Fridman Podcast #38, François Chollet: Keras, Deep Learning, and the Progress of AI (video)

- Lex Fridman Podcast #367, Sam Altman: OpenAI CEO on GPT-4, ChatGPT, and the Future of AI (video)

- Lex Fridman Podcast #85, Roger Penrose: Physics of Consciousness and the Infinite Universe (video)

- The Great Mental Models, from Shane Parrish’s Farnam Street website

- Thinking, Fast and Slow, book by psychologist Daniel Kahneman

You said:

I have a talk in a couple of hours with a journalist interested in AI applications in sport. I wonder if you could help me prepare for the discussion. The difficulty I might have is articulating myself, since I’m not a native English speaker, but what I would like to point out is:

AI, but what truly is intelligence? There are some traits we can “simulate” or teach a computer to do, but others we cannot. In training prescription, for example, we rely on the assumption that part of the art of a coach is “computational”, which means that it is just about memory and calculations, rather than empathy or intuition. We can leverage computers to do that part only, and ultimately we should (for efficiency).

However, complex models might have a limited ability to deliver a response effectively, because they might be limited by their construction. LLMs, for example, express ideas and principles with language, which cannot cover the resolution of the thinking space and might be limited by the rules of grammar. Different languages can express different things (in English you can say “dream away”, as the famous writer said, but you cannot do that in Spanish). This is a silly example, but it shows that an LLM can only learn about a portion of the representation of the world, and that the text medium is either inefficient or incomplete.

So I want to stress that the model itself is not the real tech advancement; the attention should be given to the interface, i.e. the usability. How the machine can interact with the human side is more important. AI will not be solely machine, because it would not be useful.

Another important aspect is the presence of a training dataset from which the model can draw. Extrapolation to information outside that context might be possible, but it can never be scientific, because the experimentation part is missing. So either we do not believe the machine when it formulates a hypothesis, or we just let it interpolate the data and propose again what we already know.

Also, the problem of the complexity of the models is not trivial. Let’s say that I have so many sensors, and a model so big that it can digest everything I give it, to understand whether I’m responding well to training. One day I wake up and I do not respond well, and the model tells me that it’s because I ate carrots the Wednesday before. How is that actionable? Maybe it’s just a local minimum and I will fall into that situation again with a totally different input, i.e. the system is chaotic.

Also, I want to point out that if a machine could ever explain an important physiological relationship in simple and actionable terms, many scientists investigating that area should be quite ashamed not to have seen it before the machine. For example, there was a nice, provocative tweet from a person I follow on X about a model which could predict VO2max in a very easy way from the pedalling cadence of a single training session. But I say: if the solution is already there and a simple model can find it and explain it, it means that we as scientists are failing badly.

So, I’m not against AI or LLMs or new tech at all; I’m just trying to see through the hype and predict where things are going to be. These technologies are great, not necessarily in the sense that they can teach us something, but because they can transform our interface with the machine, making it possible to communicate more effectively and to communicate different things faster. Synthesising information is a big part of the job.

That is why I think that Athletica is one step ahead in the game: if there is some portion of coaching which is computational and automatable, then we start from a good point, because the scientific method (which is the foundation of Athletica) is the most synthesisable approach of all. All you need to do is encode the knowledge into a platform and take care of the usability; the more inclined you are to this approach, the better your relationship with AI will be.

Also, Athletica’s knowledge gives the advantage of priors. Priors are those rules already known from experience or science that can be the starting point of more complex models. For example, you take the supercompensation or Banister model for training adaptations and encode it, keeping in mind that the non-linearities can be left to a numerical model. The more knowledge you have about a topic, the smaller your AI model can be, as you are encoding a lot of knowledge, which requires fewer parameters and less training data. This is not only a matter of efficiency in energy or computational power: you are also less likely to fall into a local-minimum trap and, almost more importantly, you will have a solution that is explainable to the user.

ChatGPT said:

You’re on the right track, and it sounds like you have a solid grasp of the key points you want to convey. I’ll help you refine and articulate your ideas clearly, with a focus on making your arguments persuasive and easy to understand. Here’s a structured approach you can take during your conversation with the journalist:

- Defining Intelligence and AI’s Role in Sports
  - Opening Point: Start by questioning what we mean by “intelligence.” You can say that while AI can simulate certain aspects of human intelligence, like memory and calculations, it’s important to acknowledge that true intelligence also involves qualities like empathy, intuition, and creativity, areas where AI is still limited.
  - Application to Sports: In sports training, for example, AI can assist with data-driven aspects of coaching, like analyzing performance metrics or prescribing training regimens based on computational models. However, the art of coaching goes beyond just data: it’s also about understanding athletes on a human level, something AI can’t fully replicate.

- Limitations of AI Models
  - Complex Models and Communication: Explain that while AI models, including Large Language Models (LLMs), can process and express ideas within the constraints of language, they are limited by the structure of language itself. Different languages have different capacities for expression, and some nuances or concepts may be lost or misrepresented when translated across languages or distilled into computational terms.
  - Example: Use the example of the phrase “dream away” in English, which has no direct equivalent in some other languages, to illustrate that AI’s understanding and communication are inherently limited by the data it has been trained on and the medium (text) it uses to communicate.

- Importance of Interface and Usability
  - Key Argument: Emphasize that the real advancement in AI isn’t just in creating complex models but in how effectively these models interact with humans. The interface (the way AI communicates with and assists users) is crucial. AI must be practical and actionable, not just sophisticated.
  - AI as a Tool: AI should be seen as a tool that enhances human capabilities rather than replaces them. It’s more about augmenting the human experience by providing better interfaces for understanding and interacting with complex data.

- Challenges with Complex Models and Data Interpretation
  - Complexity vs. Usability: Highlight that as models become more complex, they may also become harder to interpret and less practical for real-world application. For example, if an AI model suggests that poor athletic performance is due to eating carrots a week earlier, this might not be actionable advice. Such complexity can lead to confusion rather than clarity.
  - Chaotic Systems: Discuss the idea that sports performance is a chaotic system: small changes can have disproportionate effects, making it difficult for AI to consistently provide reliable guidance in every situation.

- The Role of Scientific Knowledge in AI
  - Importance of Priors: Stress that AI models built with a strong foundation of scientific knowledge are more efficient and reliable. By incorporating well-established principles like the supercompensation or Banister model, AI systems can be more effective and require fewer data points to make accurate predictions.
  - Explainability and Trust: A smaller, more focused model grounded in scientific knowledge is not only more efficient but also more explainable. This transparency is critical for users to trust AI recommendations.

- Conclusion: The Future of AI in Sports
  - Balanced Perspective: Conclude by stating that you’re not against AI or new technologies, but you advocate for a balanced view that cuts through the hype. The future of AI in sports lies not in replacing human coaches or making groundbreaking discoveries, but in improving how we communicate and interact with data.
  - Athletica as a Case Study: Mention Athletica as an example of how AI can be effectively integrated into sports by focusing on usability and leveraging scientific knowledge. By doing so, Athletica is able to provide actionable insights and enhance the coaching process.

Final Tips:

- Clarity and Simplicity: Try to articulate your points clearly and avoid jargon as much as possible, especially since English isn’t your first language.
- Engagement: Feel free to ask the journalist questions to engage in a more dynamic conversation, and don’t hesitate to pause if you need to collect your thoughts.

This structure should help you confidently navigate the discussion, ensuring you convey your points effectively.

You said:

Thank you so much for this. Can we add a brief discussion about work replacement?

Many are concerned about AI taking over a lot of jobs, but I’d say that this is only true for certain jobs, and that it’s all right if it happens, so that people can focus on the other aspects of the job they care about. For example, I live in Italy, where you pay a toll when you’re on the highway, and the job of checking the tickets and giving change is quite mechanistic and repetitive: you can “replace” it with a machine. However, that workforce can do something else rather than checking tickets, to make the motorway a better experience for drivers. There are countless examples. However, this transition cannot happen if the workforce is not flexible and adaptable. So this is also a wake-up call, from which we can foresee changes in how workforces are trained: for flexibility, and not for doing one single job for the rest of their lives, which also makes the job a better overall life experience for them.

This point is very important, I think, because many times we confuse the concept of a job position with the need for that job, or with the usefulness of that job. We reduce teachers to a job position, forgetting that the objective is the education of the nation. So, IMO, we are not focusing enough on making the workforce more flexible, but on methods to keep a position for that workforce, as if the goal of work were to provide people with a salary and not to provide society with something useful.

ChatGPT said:

That’s a very insightful point, and adding this discussion will definitely enrich your conversation with the journalist. Here’s how you can articulate this idea:

- AI and Job Replacement: A Balanced Perspective
  - Addressing Concerns: Many people are understandably concerned about AI replacing jobs, particularly those that involve repetitive or mechanistic tasks. However, it’s important to recognize that this is not a blanket truth for all types of work.
  - Example of Automation: Take the example of toll collectors on highways in Italy. Checking tickets and giving change is a highly repetitive job that can be efficiently automated. But the key point here is not just about replacing the job; it’s about rethinking what the workforce can do instead.

- The Opportunity for Workforce Transformation
  - New Roles and Better Experiences: When AI or machines take over repetitive tasks, it frees up human workers to engage in more meaningful, creative, and impactful work. For instance, instead of collecting tolls, these workers could focus on improving the overall experience for drivers on the motorway, perhaps through customer service, maintenance, or even roles in traffic management and safety.
  - Flexibility and Adaptability: This shift highlights the importance of preparing the workforce for flexibility and adaptability. Rather than training people to do a single, static job for their entire lives, we should be focusing on developing skills that allow them to transition into new roles as technology evolves.

- Rethinking the Purpose of Work
  - Jobs vs. Utility: Often, we conflate the concept of a job position with the need for that job, forgetting that the ultimate goal is to provide value to society. For example, in the case of teachers, the goal isn’t just to employ teachers; it’s to ensure the education of the nation. Similarly, the goal of a job isn’t just to provide a salary but to contribute something useful and meaningful to society.
  - Wake-Up Call: The rise of AI should be seen as a wake-up call for us to rethink how we prepare our workforce. We should be training people not just for specific jobs but for the ability to adapt and thrive in a rapidly changing environment. This shift in focus can lead to a better overall life experience for workers, as they can engage in work that is more fulfilling and impactful.

- Conclusion: Embracing Change for a Better Future
  - Final Thoughts: Conclude this point by saying that while AI will inevitably lead to changes in the job market, this isn’t necessarily a bad thing. It’s an opportunity to elevate the workforce by moving people away from mundane tasks and into roles that are more aligned with their skills and passions. In the long run, this transition can lead to a more dynamic, innovative, and satisfying work environment for everyone.

Additional Tips:

- Acknowledge Concerns: While making this point, it might be useful to acknowledge that transitions can be difficult and that there needs to be support for workers during these shifts, such as retraining programs or other forms of assistance.
- Focus on the Positive: Emphasize the potential benefits of this change, both for individuals and for society as a whole. This will help to frame AI not as a threat, but as a tool for positive transformation.

By incorporating this discussion, you provide a nuanced view that balances the potential challenges of AI-driven job replacement with the opportunities for workforce improvement and societal benefit.

You said:

I think it will be a nice discussion. Can you please put all the points together?

ChatGPT said:

Certainly! Here’s a consolidated version of the points we’ve discussed, which you can use as a guide for your conversation with the journalist:

Discussion on AI in Sports and Workforce Transformation

- Defining Intelligence and AI’s Role in Sports
  - What is True Intelligence? Begin by questioning what we really mean by “intelligence.” AI can simulate certain aspects of human intelligence, such as memory and calculations, but true intelligence also involves qualities like empathy, intuition, and creativity, areas where AI is still limited.
  - Application to Sports: In sports, AI can assist with data-driven aspects like analyzing performance metrics or prescribing training regimens. However, the art of coaching goes beyond data: it’s also about understanding athletes on a personal level, something AI can’t fully replicate.

- Limitations of AI Models
  - Complex Models and Communication: AI models, including Large Language Models (LLMs), can express ideas within the constraints of language, but they are limited by the structure of language itself. Different languages have different capacities for expression, which means some nuances may be lost or misrepresented.
  - Example: Use the example of the phrase “dream away” in English, which lacks a direct equivalent in some other languages, to illustrate how AI’s understanding and communication are limited by the data and language it’s trained on.

- Importance of Interface and Usability
  - Focus on Interface: The real advancement in AI isn’t just in creating complex models but in how effectively these models interact with humans. The usability of AI (the way it communicates and assists users) is crucial. AI should enhance human capabilities, not replace them.
  - AI as a Tool: AI should be seen as a tool that augments human experience by providing better interfaces for understanding and interacting with complex data, making communication more efficient and effective.

- Challenges with Complex Models and Data Interpretation
  - Complexity vs. Usability: As AI models become more complex, they may also become harder to interpret and less practical. For example, if an AI model suggests that poor athletic performance is due to eating carrots a week earlier, this advice may not be actionable and could cause confusion.
  - Chaotic Systems: Sports performance is a chaotic system: small changes can have disproportionate effects, making it difficult for AI to consistently provide reliable guidance.

- The Role of Scientific Knowledge in AI
  - Importance of Priors: AI models built on a foundation of scientific knowledge are more efficient and reliable. By incorporating well-established principles like the supercompensation or Banister model, AI systems can be more effective and require fewer data points for accurate predictions.
  - Explainability and Trust: A smaller, more focused model grounded in scientific knowledge is not only more efficient but also more explainable. This transparency is essential for building trust in AI recommendations.

- AI and Job Replacement: A Balanced Perspective
  - Addressing Concerns: While there’s concern about AI replacing jobs, it’s important to recognize that this is not true for all work. Certain repetitive and mechanistic tasks can be automated, but this opens up opportunities for workers to focus on more meaningful roles.
  - Example of Automation: In Italy, toll collectors on highways perform repetitive tasks that can be automated. This shift allows workers to engage in roles that enhance the overall experience for drivers, such as customer service or traffic management.

- The Opportunity for Workforce Transformation
  - New Roles and Better Experiences: When AI takes over repetitive tasks, it frees up workers to engage in more meaningful, creative, and impactful work. This transition highlights the importance of training the workforce for flexibility and adaptability, preparing them for roles that are not static but evolve with technology.
  - Rethinking the Purpose of Work: Often, we confuse the existence of a job position with the necessity of that job, forgetting that the goal is to provide value to society. For instance, the objective isn’t just to employ teachers but to educate the nation. Similarly, the goal of a job isn’t merely to provide a salary but to contribute something useful to society.

- Conclusion: Embracing Change for a Better Future
  - Balanced Perspective: Conclude by emphasizing that AI will lead to changes in the job market, but this is an opportunity to elevate the workforce. By automating mundane tasks, we can allow people to engage in work that is more fulfilling and impactful, leading to a more dynamic, innovative, and satisfying work environment.
  - Support for Transition: Acknowledge that while transitions can be challenging, they also provide a chance to rethink how we train and prepare our workforce, ensuring that workers can adapt and thrive in a rapidly changing environment.

This structured approach will help you guide the conversation effectively, ensuring that you cover all your key points while maintaining a clear and engaging discussion. Good luck with your talk!
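
The point about priors in the conversation above can be made concrete. What follows is a minimal Python sketch of the Banister impulse-response model, written only to illustrate the idea; it is not Athletica's implementation. The function name `banister_performance` and the default values of `p0`, `k1`, `k2`, `tau1` and `tau2` are illustrative assumptions, not fitted parameters.

```python
import math


def banister_performance(loads, p0=0.0, k1=1.0, k2=2.0, tau1=42.0, tau2=7.0):
    """Predict daily performance from daily training loads.

    Performance on day t is a baseline plus a slowly decaying fitness term
    minus a quickly decaying fatigue term, both driven by past loads.
    All default parameter values are hypothetical, for illustration only.
    """
    fitness = 0.0  # sum of past loads, decayed with time constant tau1
    fatigue = 0.0  # sum of past loads, decayed with time constant tau2
    performance = []
    for load in loads:
        # Performance today depends only on loads from previous days.
        performance.append(p0 + k1 * fitness - k2 * fatigue)
        # Add today's load, then decay both components by one day.
        fitness = (fitness + load) * math.exp(-1.0 / tau1)
        fatigue = (fatigue + load) * math.exp(-1.0 / tau2)
    return performance


# One hypothetical training session on day 0, followed by rest days.
loads = [1.0] + [0.0] * 20
performance = banister_performance(loads)
print([round(p, 3) for p in performance])
```

With a single training impulse, the sketch shows the supercompensation pattern: predicted performance dips right after the session, because fatigue weighs more but decays faster, and later rises above baseline. The whole model has only a handful of parameters, which is the argument about priors: the structure already carries knowledge that a larger model would have to learn from data.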


I have a talk in a couple of hours with a journalist interested in the AI sport applications. I wonder if you could help me discussing forefront about the topic. The difficulties I might haveis to articulate myself since I’m not native English speaker, but what I would like to point out is:

AI, but what is truly intelligence? There are some traits we can “simulate” or teach a computer how to do, but some others not. In training prescription, for example, we rely on the assumption that part of the art of a coach is “computational”, which means that it is just about memory and calculations, rather than empathy or intuition. We can leverage computer to do that part only, and ultimately, we should (for efficiency).

However, complex models might have a limited ability in being effectively delivering a response, because they might be limited by their construction. LLM for example, they can express ideas and principles with language, which cannot cover the resolution of the thinking space, and it might be limited by the rules of grammar. Different languages can express different things (in english you can say “dream away” as the famous writer said, but you cannot do that in Spanish). This is a silly example but it makes you understand that LLM can only learn about a portion of the representation of the world, and the text medium is either inefficient or incomplete.

So I want to stress that the model itself is not the real tech advancement, but the attention should be given to the interface, i.e. the usability. How the machine can interact with the human side is more important. AI will not be solely machine because it would not be useful.

Another important aspect is the presence of a training dataset, from which the model can draw. Extrapolation to non in-context information might be possibile, but cannot be ever scientific because you miss the experimentation portion of things. So either we do not believe the machine when it formulates an hypothesis or we just let it interpolate teh data and propose again what we already know.

Also, the problem of the complexity of the models is not trivial. Let’s say that I have so many sensors and a model so big that can digest everything I give to understand if I’m responding well to training. One day I wake up and I do not respond well and the model is telling me that it’s because I ate carrots the Wednesday before. How is that actionable? Maybe it’s just a local minimum and I will fall again in that situation with a totally different input, i.e.: the system is chaotic.

Also, I want to point out that if ever a machine could explain in simple and actionable terms an important physiological relationship, many scientists investigating that area should be quite ashamed not to be able to see that before the machine. For example, there was a nice Tweet from a person I follow on X, and his provocatory Tweet was about a model which could predict in a very easy way VO”max from the pedalling cadence of a single training session. But I say: if the solution is already there and a simple model can find it and explain it, it means that us as scientists are failing badly.

So, I’m not againt AI or LLM or new tech at all, I’m just trying to see through the hype and predict where things are going to be. These techs are great but not in the sense that they can teach us somethign necessarily, but they can transform our interface with the machine making it possible to communicate more effectively and communicate differnet things faster. Synthetising information is a big part of the job.

That is why I think that AThletica is one step ahead in teh game: if there is some portion of coaching which is computaitonal and automatisable, then we start from a good point because the scientific method (which is the funation of Athletica) is the most synthetisable appraoch of all. All you need to do is to encode the knowledge into a platofrm and take care of the usability, the more you are prone to this <pproach the better relationship with AI you will have.

Also, Athletica knowledge give the advantage of the priors. The priors are those rules already known from experience or science, that can be the starting point of more complex models. For example you take the supercompensation or Banister model for training adaptations, and then you encode it, but taking into account that non-linearities can be devoted to a numerical model. The more knowldge you have about a topic, the smaller your AI model can be, as you are encoding a lot of knowldge taht require less parameters and less data for training. This is not only an efficeiny issue with energy or computationl power, but its’ due to the fact that you are less likely to fall into a local minimum trap and, almost more importantly, you will have an explainable solution to the user.

ChatGPT said: ChatGPT You’re on the right track, and it sounds like you have a solid grasp of the key points you want to convey. I’ll help you refine and articulate your ideas clearly, with a focus on making your arguments persuasive and easy to understand. Here’s a structured approach you can take during your conversation with the journalist:

- Defining Intelligence and AI’s Role in Sports Opening Point: Start by questioning what we mean by “intelligence.” You can say that while AI can simulate certain aspects of human intelligence, like memory and calculations, it’s important to acknowledge that true intelligence also involves qualities like empathy, intuition, and creativity—areas where AI is still limited. Application to Sports: In sports training, for example, AI can assist with data-driven aspects of coaching, like analyzing performance metrics or prescribing training regimens based on computational models. However, the art of coaching goes beyond just data—it’s also about understanding athletes on a human level, something AI can’t fully replicate.

- Limitations of AI Models Complex Models and Communication: Explain that while AI models, including Large Language Models (LLMs), can process and express ideas within the constraints of language, they are limited by the structure of language itself. Different languages have different capacities for expression, and some nuances or concepts may be lost or misrepresented when translated across languages or distilled into computational terms. Example: Use the example of the phrase “dream away” in English, which has no direct equivalent in some other languages, to illustrate that AI’s understanding and communication are inherently limited by the data it has been trained on and the medium (text) it uses to communicate.

- Importance of Interface and Usability Key Argument: Emphasize that the real advancement in AI isn’t just in creating complex models but in how effectively these models interact with humans. The interface—the way AI communicates with and assists users—is crucial. AI must be practical and actionable, not just sophisticated. AI as a Tool: AI should be seen as a tool that enhances human capabilities rather than replaces them. It’s more about augmenting the human experience by providing better interfaces for understanding and interacting with complex data.

- Challenges with Complex Models and Data Interpretation Complexity vs. Usability: Highlight that as models become more complex, they may also become harder to interpret and less practical for real-world application. For example, if an AI model suggests that poor athletic performance is due to eating carrots a week earlier, this might not be actionable advice. Such complexity can lead to confusion rather than clarity. Chaotic Systems: Discuss the idea that sports performance is a chaotic system—small changes can have disproportionate effects, making it difficult for AI to consistently provide reliable guidance in every situation.

- The Role of Scientific Knowledge in AI Importance of Priors: Stress that AI models built with a strong foundation of scientific knowledge are more efficient and reliable. By incorporating well-established principles like the supercompensation or Banister model, AI systems can be more effective and require fewer data points to make accurate predictions. Explainability and Trust: A smaller, more focused model grounded in scientific knowledge is not only more efficient but also more explainable. This transparency is critical for users to trust AI recommendations.

- Conclusion: The Future of AI in Sports Balanced Perspective: Conclude by stating that you’re not against AI or new technologies, but you advocate for a balanced view that cuts through the hype. The future of AI in sports lies not in replacing human coaches or making groundbreaking discoveries, but in improving how we communicate and interact with data. Athletica as a Case Study: Mention Athletica as an example of how AI can be effectively integrated into sports by focusing on usability and leveraging scientific knowledge. By doing so, Athletica is able to provide actionable insights and enhance the coaching process.

Final Tips: Clarity and Simplicity: Try to articulate your points clearly and avoid jargon as much as possible, especially since English isn’t your first language. Engagement: Feel free to ask the journalist questions to engage in a more dynamic conversation, and don’t hesitate to pause if you need to collect your thoughts.

This structure should help you confidently navigate the discussion, ensuring you convey your points effectively.
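To make the Banister model named in the points above more concrete, here is a minimal sketch of its usual impulse-response form: predicted performance is a baseline plus a slowly decaying "fitness" term minus a faster decaying "fatigue" term, both driven by past training load. The function name, the parameter values (`p0`, `k1`, `k2`, `tau1`, `tau2`) and the training block are illustrative assumptions, not values taken from Athletica or from this conversation.

```python
import math

def banister_performance(loads, p0=0.0, k1=1.0, k2=2.0, tau1=42.0, tau2=7.0):
    """Predict performance for each day from the loads of all previous days.

    loads: daily training load (arbitrary units), one value per day.
    p0: baseline performance; k1, k2: fitness and fatigue gains;
    tau1, tau2: fitness and fatigue decay time constants in days.
    All default values are illustrative, not fitted to any athlete.
    """
    predictions = []
    for t in range(len(loads)):
        # Each past session contributes less the further back it lies.
        fitness = sum(loads[s] * math.exp(-(t - s) / tau1) for s in range(t))
        fatigue = sum(loads[s] * math.exp(-(t - s) / tau2) for s in range(t))
        predictions.append(p0 + k1 * fitness - k2 * fatigue)
    return predictions

# Hypothetical block: 28 days of constant load followed by 14 days of rest.
loads = [100.0] * 28 + [0.0] * 14
perf = banister_performance(loads)

# Fatigue fades faster than fitness, so predicted performance keeps rising
# during the rest period.
print(round(perf[28]), round(perf[41]))
```

With only five parameters, each of which has a physiological reading, a model like this can be fitted from relatively few sessions and its predictions can be explained to a coach, which is the point the discussion makes about priors and explainability.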

You said: Thank you so much for this, can we add a brief discussion about work replacement?

Many are concerned about AI taking over a lot of jobs, but I’d say that this is only true for certain jobs, and that it’s all right if it happens, so people can focus on the aspects of the job they care about. For example, I live in Italy, where you pay a toll on the highway, and the job of checking tickets and giving change is quite mechanistic and repetitive: you can “replace” it with a machine. However, that workforce can do something else rather than checking tickets, to make the motorway a better experience for drivers. There are countless examples. However, this transition cannot happen if the workforce is not flexible and adaptable. So this is also a wake-up call: we can foresee changes in how workforces are trained, for flexibility rather than for doing one single job for the rest of their lives, making the job an overall better life experience for them as well.

This point is very important, I think, because we often confuse the concept of a job position with the need for that job or the usefulness of that job. We lump all teachers into a job, forgetting that the objective is the education of the nation. So, IMO, we are not focusing enough on making the workforce more flexible, but on ways to preserve positions for that workforce, as if the goal of work were to provide people with a salary rather than to provide society with something useful.

ChatGPT said: That’s a very insightful point, and adding this discussion will definitely enrich your conversation with the journalist. Here’s how you can articulate this idea:

- AI and Job Replacement: A Balanced Perspective Addressing Concerns: Many people are understandably concerned about AI replacing jobs, particularly those that involve repetitive or mechanistic tasks. However, it’s important to recognize that this is not a blanket truth for all types of work. Example of Automation: Take the example of toll collectors on highways in Italy. Checking tickets and giving change is a highly repetitive job that can be efficiently automated. But the key point here is not just about replacing the job—it’s about rethinking what the workforce can do instead.

- The Opportunity for Workforce Transformation New Roles and Better Experiences: When AI or machines take over repetitive tasks, it frees up human workers to engage in more meaningful, creative, and impactful work. For instance, instead of collecting tolls, these workers could focus on improving the overall experience for drivers on the motorway—perhaps through customer service, maintenance, or even roles in traffic management and safety. Flexibility and Adaptability: This shift highlights the importance of preparing the workforce for flexibility and adaptability. Rather than training people to do a single, static job for their entire lives, we should be focusing on developing skills that allow them to transition into new roles as technology evolves.

- Rethinking the Purpose of Work Jobs vs. Utility: Often, we conflate the concept of a job position with the need for that job, forgetting that the ultimate goal is to provide value to society. For example, in the case of teachers, the goal isn’t just to employ teachers; it’s to ensure the education of the nation. Similarly, the goal of a job isn’t just to provide a salary but to contribute something useful and meaningful to society. Wake-Up Call: The rise of AI should be seen as a wake-up call for us to rethink how we prepare our workforce. We should be training people not just for specific jobs but for the ability to adapt and thrive in a rapidly changing environment. This shift in focus can lead to a better overall life experience for workers, as they can engage in work that is more fulfilling and impactful.

- Conclusion: Embracing Change for a Better Future Final Thoughts: Conclude this point by saying that while AI will inevitably lead to changes in the job market, this isn’t necessarily a bad thing. It’s an opportunity to elevate the workforce by moving people away from mundane tasks and into roles that are more aligned with their skills and passions. In the long run, this transition can lead to a more dynamic, innovative, and satisfying work environment for everyone.

Additional Tips: Acknowledge Concerns: While making this point, it might be useful to acknowledge that transitions can be difficult and that there needs to be support for workers during these shifts, such as retraining programs or other forms of assistance. Focus on the Positive: Emphasize the potential benefits of this change, both for individuals and for society as a whole. This will help to frame AI not as a threat, but as a tool for positive transformation.

By incorporating this discussion, you provide a nuanced view that balances the potential challenges of AI-driven job replacement with the opportunities for workforce improvement and societal benefit.

You said: I think it will be a nice discussion. Can you please put all the points together?

ChatGPT said: Certainly! Here’s a consolidated version of the points we’ve discussed, which you can use as a guide for your conversation with the journalist:

Discussion on AI in Sports and Workforce Transformation

- Defining Intelligence and AI’s Role in Sports What is True Intelligence? Begin by questioning what we really mean by “intelligence.” AI can simulate certain aspects of human intelligence, such as memory and calculations, but true intelligence also involves qualities like empathy, intuition, and creativity—areas where AI is still limited. Application to Sports: In sports, AI can assist with data-driven aspects like analyzing performance metrics or prescribing training regimens. However, the art of coaching goes beyond data—it’s also about understanding athletes on a personal level, something AI can’t fully replicate.

- Limitations of AI Models Complex Models and Communication: AI models, including Large Language Models (LLMs), can express ideas within the constraints of language, but they are limited by the structure of language itself. Different languages have different capacities for expression, which means some nuances may be lost or misrepresented. Example: Use the example of the phrase “dream away” in English, which lacks a direct equivalent in some other languages, to illustrate how AI’s understanding and communication are limited by the data and language it’s trained on.

- Importance of Interface and Usability Focus on Interface: The real advancement in AI isn’t just in creating complex models but in how effectively these models interact with humans. The usability of AI—the way it communicates and assists users—is crucial. AI should enhance human capabilities, not replace them. AI as a Tool: AI should be seen as a tool that augments human experience by providing better interfaces for understanding and interacting with complex data, making communication more efficient and effective.

- Challenges with Complex Models and Data Interpretation Complexity vs. Usability: As AI models become more complex, they may also become harder to interpret and less practical. For example, if an AI model suggests that poor athletic performance is due to eating carrots a week earlier, this advice may not be actionable and could cause confusion. Chaotic Systems: Sports performance is a chaotic system—small changes can have disproportionate effects, making it difficult for AI to consistently provide reliable guidance.

- The Role of Scientific Knowledge in AI Importance of Priors: AI models built on a foundation of scientific knowledge are more efficient and reliable. By incorporating well-established principles like the supercompensation or Banister model, AI systems can be more effective and require fewer data points for accurate predictions. Explainability and Trust: A smaller, more focused model grounded in scientific knowledge is not only more efficient but also more explainable. This transparency is essential for building trust in AI recommendations.

- AI and Job Replacement: A Balanced Perspective Addressing Concerns: While there’s concern about AI replacing jobs, it’s important to recognize that this is not true for all work. Certain repetitive and mechanistic tasks can be automated, but this opens up opportunities for workers to focus on more meaningful roles. Example of Automation: In Italy, toll collectors on highways perform repetitive tasks that can be automated. This shift allows workers to engage in roles that enhance the overall experience for drivers, such as customer service or traffic management.

- The Opportunity for Workforce Transformation New Roles and Better Experiences: When AI takes over repetitive tasks, it frees up workers to engage in more meaningful, creative, and impactful work. This transition highlights the importance of training the workforce for flexibility and adaptability, preparing them for roles that are not static but evolve with technology. Rethinking the Purpose of Work: Often, we confuse the existence of a job position with the necessity of that job, forgetting that the goal is to provide value to society. For instance, the objective isn’t just to employ teachers but to educate the nation. Similarly, the goal of a job isn’t merely to provide a salary but to contribute something useful to society.

- Conclusion: Embracing Change for a Better Future Balanced Perspective: Conclude by emphasizing that AI will lead to changes in the job market, but this is an opportunity to elevate the workforce. By automating mundane tasks, we can allow people to engage in work that is more fulfilling and impactful, leading to a more dynamic, innovative, and satisfying work environment. Support for Transition: Acknowledge that while transitions can be challenging, they also provide a chance to rethink how we train and prepare our workforce, ensuring that workers can adapt and thrive in a rapidly changing environment.

This structured approach will help you guide the conversation effectively, ensuring that you cover all your key points while maintaining a clear and engaging discussion. Good luck with your talk!

You said: What would you say it means to think together with a machine? I mean, suppose you ask me a question like: 10 people were on the bus, half of them got off at the first station and 12 got on; at the second station 3 got off and 7 got on, etc. I can solve this problem using a calculator, or by doing the math in my head. In the first case, was I thinking together with a machine? If I had no notion of what a bus was and looked it up on Wikipedia, was I thinking together with a machine, or was I just using it to make calculations? Obviously the calculator would know nothing about buses, would it? Can you please give me your point of view?

ChatGPT said: This is a fascinating question that delves into the nature of human-machine interaction and the concept of “thinking together” with a machine. Let me offer my perspective.

Human-Machine Collaboration vs. Pure Calculation

When you solve a problem using a calculator or a computer, you’re engaging in a form of collaboration with the machine, but it’s a limited and specific type of collaboration. Let’s break down the different scenarios you mentioned:

Calculating the Bus Problem:
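For reference, the arithmetic in the part of the question that is actually stated can be written out as a short sketch. The function name and the `(off, on)` representation of each stop are assumptions made for illustration, and the stops hidden behind the "etc." in the question are deliberately left out.

```python
def passengers_after(initial, stops):
    """Apply (off, on) counts stop by stop; return the count after each stop."""
    count = initial
    history = []
    for off, on in stops:
        if off > count:
            raise ValueError("more passengers leaving than are on the bus")
        count = count - off + on
        history.append(count)
    return history

# Only the two stops stated in the question.
initial = 10
stops = [
    (initial // 2, 12),  # first station: half get off, 12 get on
    (3, 7),              # second station: 3 get off, 7 get on
]
history = passengers_after(initial, stops)
print(history)  # [17, 21]
```

The code only tracks a number going up and down. Everything that makes it a question about a bus (who the passengers are, what a station is) lives in the framing supplied by the person who wrote the list of stops.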

Pure Calculation: If you use a calculator or software to perform the arithmetic needed to solve the bus problem (e.g., adding and subtracting the number of people at each station), you’re not really “thinking together” with the machine in a deep sense. Instead, you’re offloading a specific, well-defined task to the machine. The machine is doing something that it excels at (performing precise, rapid calculations) while you retain the understanding of the problem’s context (e.g., the concept of people getting on and off a bus).

Human Oversight: You are still the one conceptualizing the problem, framing it, and interpreting the results. The machine is a tool that helps with the mechanical part of the process, but it doesn’t contribute to understanding the problem itself.

Looking Up Information on Wikipedia: